Kortext: championing endorsed AI in higher education

Navigating principles-based AI guidance

UK national guidance on AI usage currently recommends a principles-based approach that can differ vastly between (and within) institutions, leading to ambiguity regarding permitted usage and purpose.

Policy ambiguity and AI shaming behaviours open the door to the use of shadow AI, defined as the unsanctioned use of AI tools without the prior approval or oversight of the institution.

The result is persistent AI stigma and inequitable usage within student bodies, not to mention data security risks, compliance issues and inaccurate information being included in student work.

Now, moving into 2026, the dialogue has shifted.

The conversation has progressed from the initial, panic-driven impulse to ban AI tools to the question of how we fine-tune policy to embrace AI safely, securely and at scale. The aim is to avoid missing this unprecedented opportunity to reduce administrative burden for faculty, improve student outcomes and prepare our students for a world where AI tools are integral to the productivity of 99% of the workforce.

Universities walk a fine line between mitigating risks and embracing transformative digital innovation, balancing security, compliance and reputation whilst under pressure from the conflicting opinions of students, staff and governing bodies.

So, what’s the solution?

First step: understanding AI use

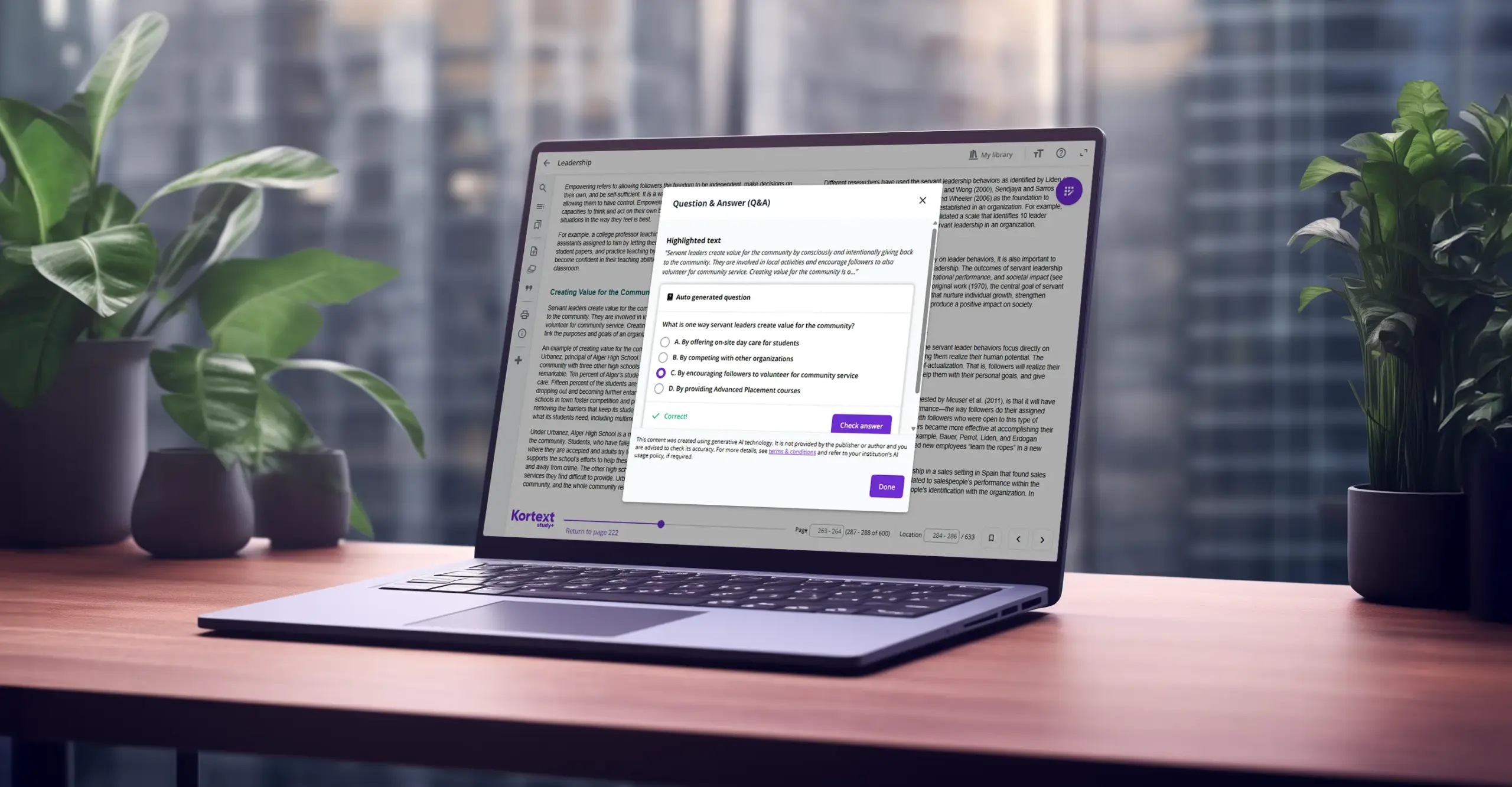

The first stage in finding the right AI tools for your institution is to really understand why and how students are using AI. The HEPI Student Generative AI Survey 2025 found that an overwhelming 92% of students are now using AI, for three main purposes: to generate text; to enhance their writing; and to summarise, take notes and quiz themselves on their knowledge.

The next stage is to understand how we can provide this to students and educators safely, securely and ethically whilst upholding academic integrity and respecting publisher content.

Enter: Endorsed AI

At Kortext, we define endorsed AI as artificial intelligence technology that is designed to operate in an approved environment.

Kortext’s use of AI relies on the approval of multiple stakeholders before integrating AI into resources:

1. Institutions

It’s a central part of the process that institutions have knowingly approved the content that our AI draws upon. Each text available on a student’s Kortext bookshelf has been specifically requested by the teaching staff at the institution. This ensures that AI-generated responses are referenced with supporting source material, allowing for accountability, fact-checking and accurate citation in academic work.

We understand the need to introduce trustworthy, secure AI at scale, and quickly.

Institutions face the challenge of hiring developers, standing up LLMs and driving adoption of endorsed AI, all whilst balancing security, compliance, cost-effectiveness and user trust.

By the time in-house technology has been built and implemented, technology has progressed and the race to keep pace is back on.

Kortext provides a unique approach that unifies content aggregation and integration at scale, accelerating adoption and time to value, enhancing the value of AI across learning ecosystems.

By partnering with Kortext, institutions also benefit from our partnership with Microsoft, which combines our thorough higher education expertise with Microsoft’s world-class Fabric and Foundry capabilities to deliver scalable, out-of-the-box, future-ready digital learning experiences that keep pace with evolving tech.

2. Kortext users

At Kortext, we believe that transparency is paramount to an endorsed AI product. It’s our commitment to be clear about what data is being collected and how it’s being used, with our AI never drawing from personal data and projecting it into the public sphere.

It’s crucial to us that our AI solutions support both sector and institutional policies on data and AI, and that we uphold our responsibility as a data controller to keep information safe, secure and in the hands of the data owners, as detailed in our privacy policy.

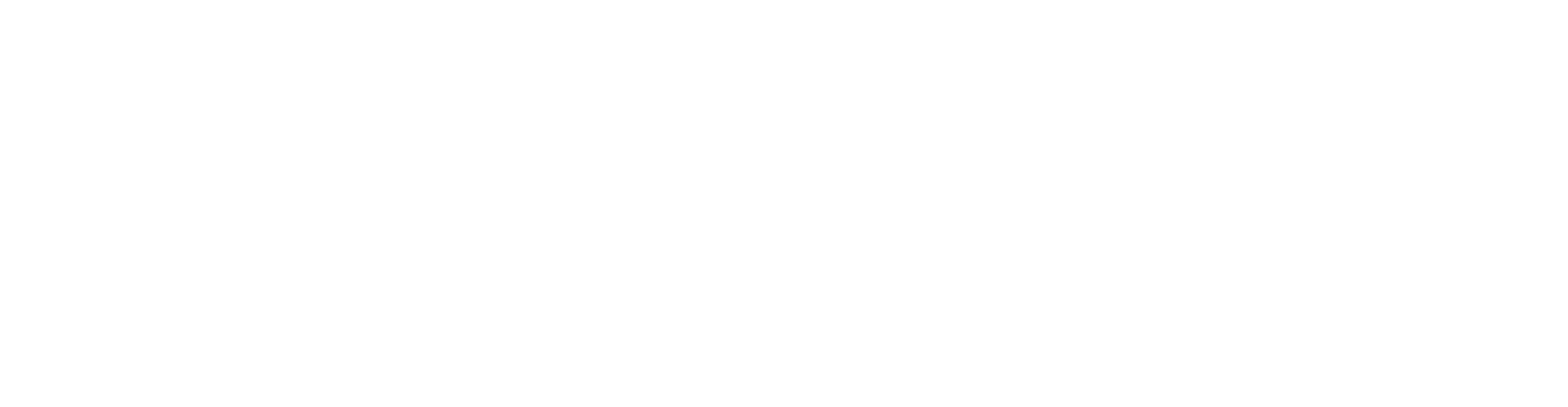

For the student, a disclaimer emphasising the need to fact-check for accuracy will appear when using study+ to summarise, create notes and generate Q&As. This reminds student users to use AI responsibly and to confirm they’re within the remit of their institution’s AI policies.

In addition, products like Kortext teach and Kortext fusion leverage AI to support the user in numerous ways. For example, AI is embedded in the experience itself, formulating a workflow for users as they teach and learn, cultivating a journey that supports academic integrity and guides learners along a lecturer-shaped path to student success.

Our solution

Leveraging our partnership with Microsoft, we’ve purpose-built a safe, secure solution that allows students to use the capabilities of generative AI within an institution-approved, ringfenced environment that doesn’t replicate or redistribute publisher materials.

This fosters appropriate use, reframing AI from a shortcut into a valuable learning tool, guiding students to interact more deeply with their licensed content whilst ensuring it remains protected from public-facing LLMs.

For leaders and educators, Kortext fusion offers a unique opportunity to harness AI within unified data, reducing administrative burden and visualising insights at institutional, cohort, or student level. The analytics drawn from the fusion foundation provide unprecedented oversight with transformative implications for institutional outcomes, financial sustainability and student success.

By providing a trusted, approved alternative to shadow AI applications, institutions can benefit from equitable access to AI tools, facilitate appropriate use of content and produce graduates who are well equipped for an AI-integrated workspace.

Book a discovery call to hear more about how Kortext fusion can power personalised and contextual AI applications for student and faculty usage at your institution.

Hear from Microsoft about how they’ve partnered with us at Kortext to pioneer safe, secure AI in higher education.