Open AI vs trusted AI

The definitions, the potential, the key considerations and where Kortext sits in the debate.

Open AI – the key concepts

What do we mean by ‘open AI’? Our focus here is on AI text generators, also known as large language models (LLMs), such as ChatGPT.

These tools have been trained on vast amounts of data from the internet, enabling them to respond to prompts with human-like outputs.

AI tools are not a new development. We take them for granted in everyday life – from search engines to grammar checkers to navigation apps.

However, the new generative AI tools are having a significant impact on how we live, learn and work.

‘The world faces an inflection point on AI. Large language models (LLMs) will introduce epoch-defining changes comparable to the invention of the internet.’

House of Lords Communications and Digital Committee, Large language models and generative AI.

Creative potential in education

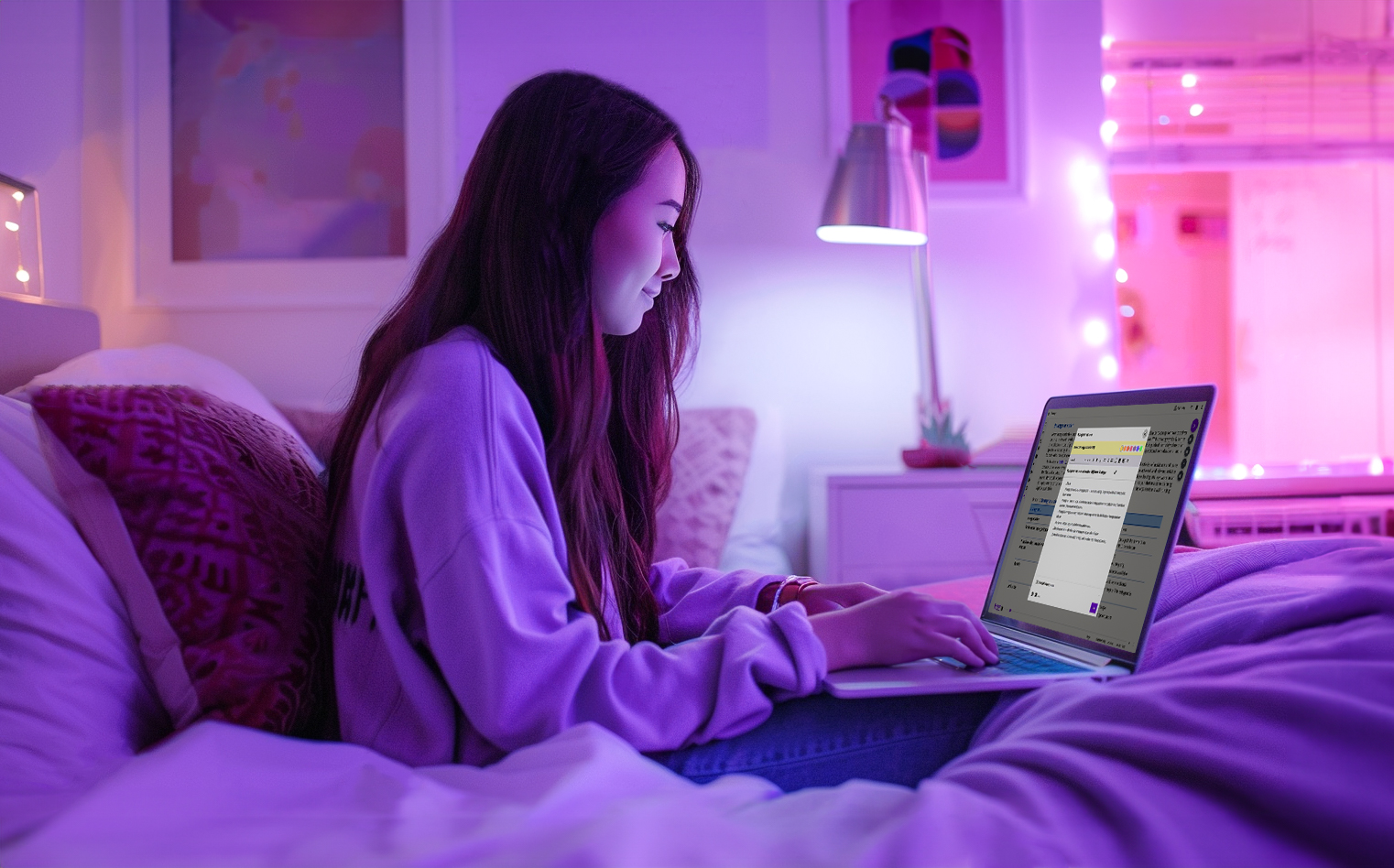

The impact of generative AI has been the topic of much debate in higher education, catalysed by the public release of ChatGPT on 30th November 2022.

Students have been quick to embrace the benefits, using the tools to help with tasks including planning, writing, translating, revising and researching.

Meanwhile, educators can use generative AI for lesson planning, designing quizzes, customising materials and providing personalised feedback.

Ethical considerations and pitfalls

But there’s a catch – or rather, several catches.

The data used to train AI text generators is scraped from a wide range of internet sources, not all of which are factually accurate.

These sources may contain human biases and stereotypes, which can then be reproduced in generated outputs.

Some LLMs create ‘hallucinations’, or plausible untruths, such as references to non-existent journal articles.

These issues have raised concerns among educators that generative AI tools could undermine academic integrity.

‘More than a third of students who have used generative AI (35%) do not know how often it produces made-up facts, statistics or citations (‘hallucinations’).’

Josh Freeman, Provide or punish? Students’ views on generative AI in higher education.

Trusted AI – the key concepts

There have been moves – in the education sector and beyond – to regulate generative AI, enabling a shift from an open model to a more trusted model.

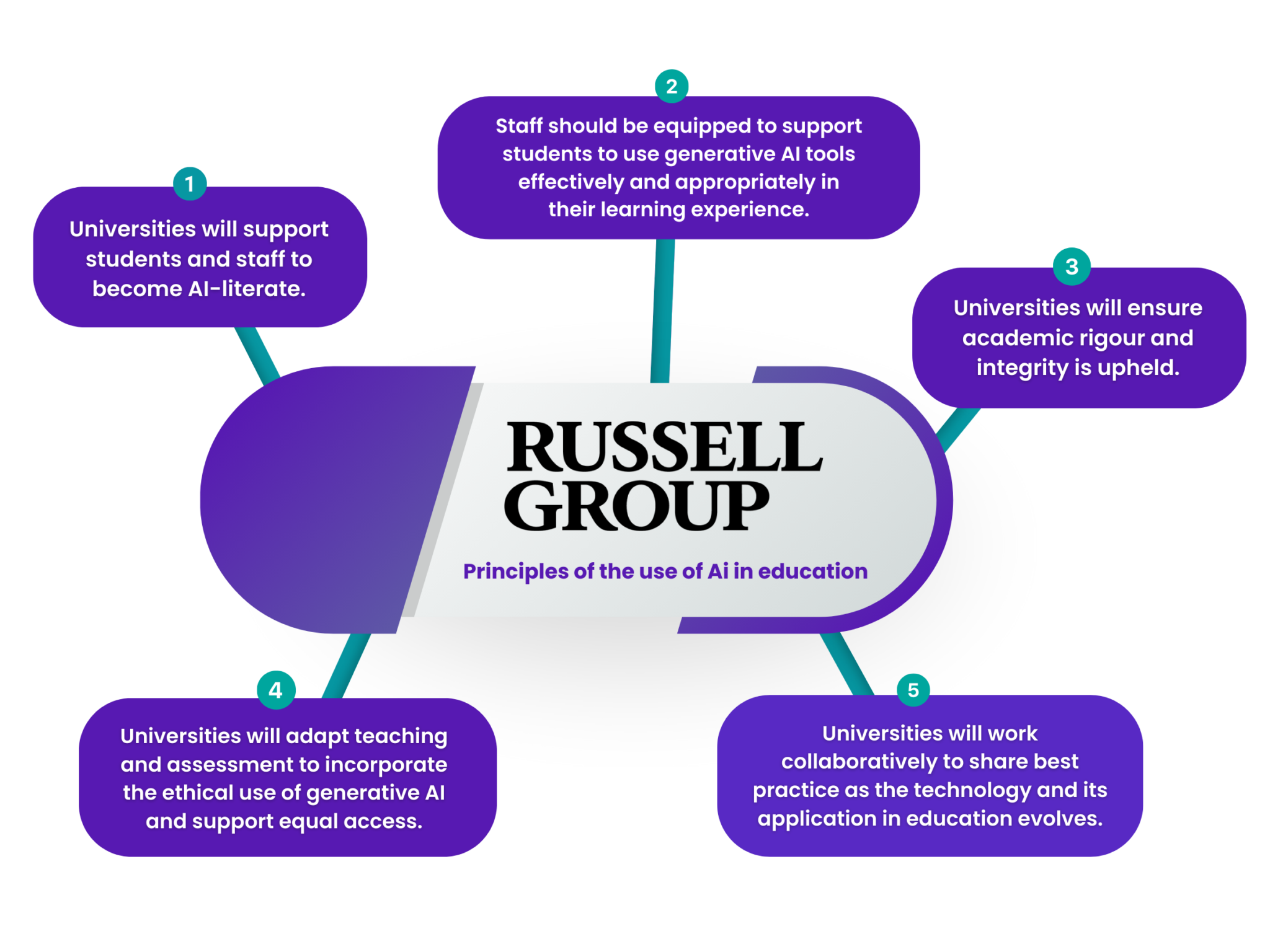

Within higher education, the Russell Group issued principles on the effective and appropriate use of AI tools within universities to enhance teaching practices and student learning experiences.

More broadly, Microsoft outlined six key principles ‘essential to creating responsible and trustworthy AI as it moves into mainstream products and services’.

What do students want?

Students want trusted AI incorporated into their education to prepare them for an AI-driven world.

A Jisc report found that students were concerned about ‘missing out on developing the AI skills they might require in future employment opportunities’.

They want universities to ‘bridge the gap’ between current curricula and the evolving job market, providing ‘training and guidance on responsible and effective AI use’.

Students ‘expressed a desire for institution-recommended tools that they can trust’, rather than relying on recommendations from friends.

‘Students are looking to their institutions to equip them with the necessary skills, training, tools, and guidance to navigate the world of generative AI in both education and employment.’

Introducing Kortext study+

Kortext study+ enables institutions to equip students with the capabilities of generative AI tools within a safe environment.

Our suite of next-generation AI-powered study tools is seamlessly integrated into the study platform as an upgrade.

Kortext study+’s trusted AI tools can summarise content, generate insightful study notes and create interactive Q&A in seconds.

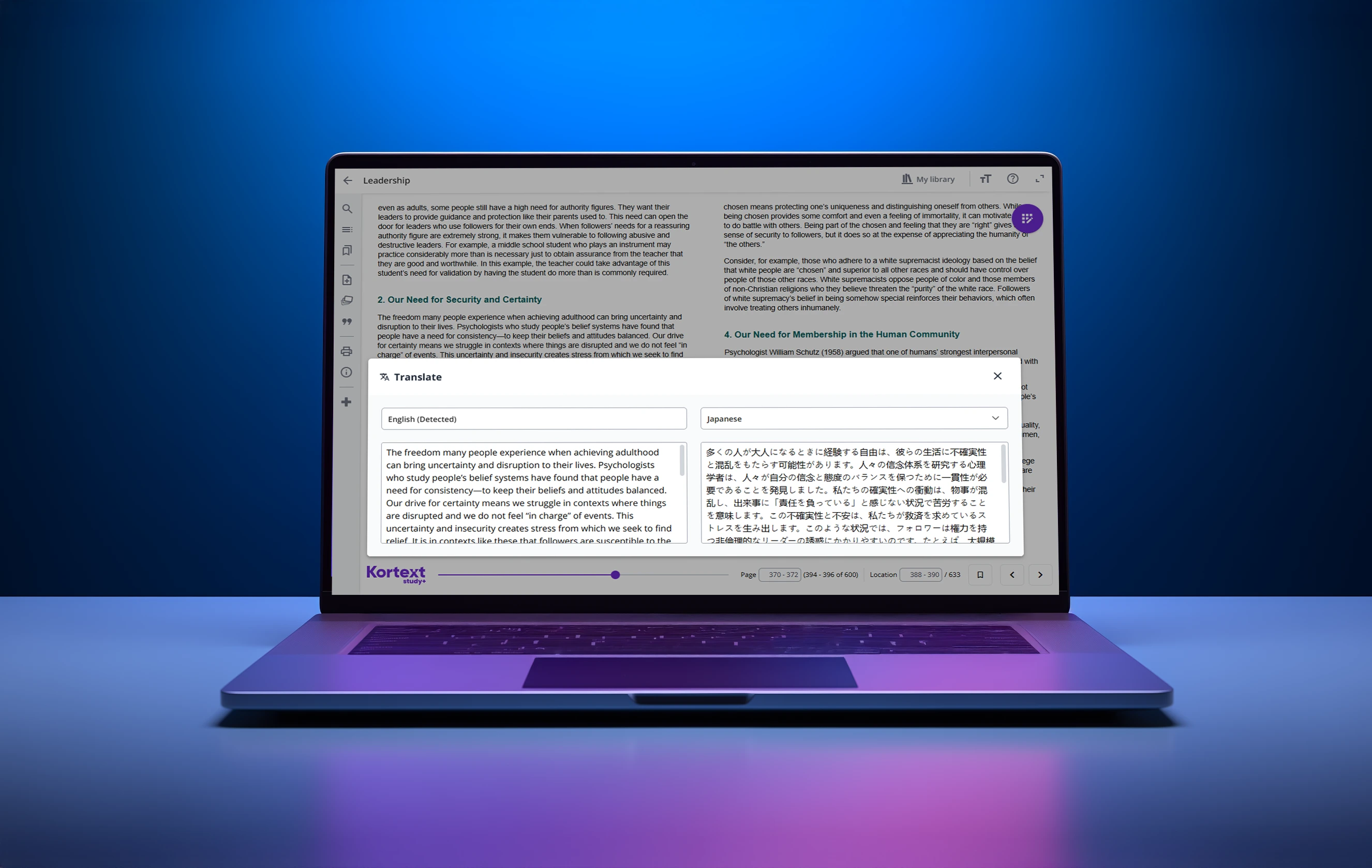

In addition, students can translate text into over 120 languages, reflecting the global nature of higher education.

Balancing trust & innovation

As a Microsoft Gold education partner, Kortext has worked extensively with Microsoft, using the latest technologies to embed the principles of responsible AI into the development of our innovative study tools.

Kortext’s AI product design principles:

- Transparent and explainable

- Bias mitigation

- Ethical use of data

- Inclusivity and accessibility

- Community guided

- Pedagogical alignment

- Feedback and iteration

Unlike open AI tools, our generative AI tools are applied to trusted content only – academic texts provisioned to a student by their institution and made available through the Kortext platform.

‘We are excited to be leading the sector with an unparalleled package of generative AI-powered study tools. We believe this new technology offers huge benefits for teaching and learning in higher education, and – informed by extensive sector research – we have delivered on the desire for students and educators to explore AI through institution-aligned tools.’

James Gray, CEO, Kortext.

Trusted by globally respected universities

Speak to us about generative AI

Want to know more about how we innovate using the latest developments in artificial intelligence?

Submit the form today and we’ll reach out to schedule a discovery call with a member of our partnership team.